Week 3: Evaluating Programs & Human Performance Technology

All too often instruction is developed with little thought as to how evaluation of learning or the effectiveness of the instruction will take place. When evaluation is considered on the front end of the instructional design process, it is often limited to evaluating whether the instructional design is more effective than traditional methods.

Instructional design evaluation focuses on measuring instructional objectives and the knowledge learners acquire through training or instruction. The goals of evaluation are to determine merit, worth, and value. Three evaluation models for instructional design are CIPP, the Five-Domain model, and Kirkpatrick’s model. Two other evaluation models are Brinkerhoff’s Success Case Method and Patton’s Utilization-Focused Evaluation (U-FE).

Brinkerhoff’s Success Case Method

Robert Brinkerhoff published his book The Success Case Method in 2003. The Success Case Method (SCM) analyzes successful training cases and compares them to unsuccessful ones. The SCM uses five steps to measure human performance by analyzing three groups: those who did not use their training, those who used some of the training but saw no improvement in performance (the largest group, see graphic above), and those who successfully used the training to improve performance. The evaluation focuses on how successfully learners and/or organizations use the learning, not on the training itself.

The five steps of the SCM are:

- establish and plan the evaluation

- define the program goals and benefits of the training

- conduct a survey to identify learner success and/or lack of success from the training

- carry out interviews to document success in applying the training

- write a formal report on the findings from the evaluation

The objective of the formative evaluation is to inform organizations and trainers about the environmental factors that promoted success, how much value was obtained, the overall return on investment, and how widespread the success of the training was. The scope of the SCM includes responses from named individuals, which helps evaluators coordinate follow-up interviews. Results of the interviews with unsuccessful cases help identify the factors that kept the training from being implemented, whether partially or completely. The end goal of the SCM is to evaluate learner “buy-in” to the prescribed training and how effectively the training is used in day-to-day operations.

Student “Buy-In”

This past year I used a modified version of the Success Case Method to evaluate my Digital Video & Audio Design class. I spoke with students I felt were successful in the class, judged not only by their grades but by how engaged they were throughout the year. The major differences between my modified version and Brinkerhoff’s SCM are the order in which I approached the evaluation and the absence of a formal report on my findings.

The purpose of my evaluation was to gather student feedback on this first-year course and the curriculum I created for it. It would not be too difficult for me to follow the SCM steps to discover how my students are using the instruction/training from my class. The area I am most interested in is the barriers and limitations that prevent students from being successful and engaged in the class. Are the barriers for unsuccessful students environmental factors, my teaching strategies, project-based learning, etc.?

Patton’s Utilization-Focused Evaluation

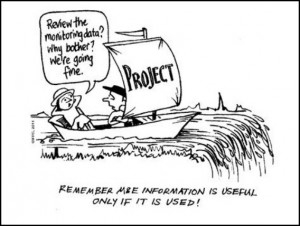

All too often, processes are evaluated without any focus on or direction for using the findings. Evaluation may be conducted simply to assess processes and trainings without providing feedback to the intended users. Patton’s Utilization-Focused Evaluation (U-FE) has been around since the 1970s and is still used today. Evaluation is sometimes like the old saying, “we have a meeting just to say we had a meeting.” An evaluation needs a purpose, and its results have to be used.

The objective of U-FE is to align the evaluation goals with how the intended users plan to use the evaluation results. For the evaluation to be successful, the intended users must be engaged in and part of the evaluation process. This gives the intended users a sense of “ownership,” and they will be more willing to provide authentic findings to the evaluators during each step of the evaluation process. The U-FE process has many steps; the nine listed below are the major ones:

- Perform a readiness assessment to evaluate the commitment level of the organization

- Identify intended users and build an engaged relationship with them

- Conduct a situational analysis of environmental factors that might have an effect on the results

- Identify the intended users and build a process to continue the evaluation, communication, and learning after the U-FE is completed

- Focus the evaluation to address questions and issues of the intended users

- Design the evaluation to report relevant findings

- Analyze the collected data and translate it for the intended users

- Actively use the evaluation results, providing guidance where needed to ensure their use

- Conduct a quality-control review of the findings (metaevaluation) to determine the extent of use, any additional uses, and any misuse of the evaluation

To create a successful evaluation, the intended users and the evaluator must both stay engaged and committed throughout the utilization-focused evaluation. The U-FE approach may be used on its own or to supplement other evaluation models.

Students as Intended Users

One way I might use the Utilization-Focused Evaluation process in my classroom is to let my students be the evaluators. Students can conduct peer evaluations of completed projects and give each other feedback. This gives students a level of ownership in the evaluation process and lets them constructively critique other students’ work. Students are aware of factors that might be limiting student success in the classroom. An advantage of this approach is that students learn from each other, and they sometimes have a way of “wording” things differently from the teacher. The students and I can conduct the quality control together.

Instructional Design Evaluation

Instructional Design vs. Traditional Methods

Here are a few questions an instructional design evaluation should address when comparing the design to traditional methods of instruction. Are students actively participating in the learning process? Are the delivery methods engaging and relevant to the desired outcomes? What environmental factors are supporting or preventing learning in the classroom? Are some areas of the instruction working better than others, and why? How much more value can be added to the instructional process? Lastly, are the findings on student performance being used to improve the instruction?

Return on Investment and Instructional Programs

The return on investment (ROI) methodology should always be considered for instructional programs. The landscape of technology is constantly changing and being updated. This constant change, together with the need for authentic and valid instructional programs, makes for a challenging path for schools. A school’s technology objectives and their contribution to student success should be a driving force when investing in new and current technologies. Educators should follow the ROI Process Model to support budgets with evidence of the impact and consequences new technology has on education. The ROI Process Model begins with an evaluation plan, then collects data, analyzes the data, and reports on the findings. Other measures, such as process improvement, time to deploy instructional programs, back-end personnel needed to support programs, and enhancement of the school’s image, should also be considered.
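The "analyze the data" step is where an ROI figure is actually calculated. As a minimal sketch, assuming the commonly used Phillips-style formulas (ROI as net program benefits divided by program costs, expressed as a percentage, plus a benefit-cost ratio), the arithmetic looks like this. The function names and dollar amounts are hypothetical and not taken from any real school program.

```python
def roi_percent(program_benefits: float, program_costs: float) -> float:
    """ROI (%) = (program benefits - program costs) / program costs * 100."""
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    net_benefits = program_benefits - program_costs
    return net_benefits / program_costs * 100


def benefit_cost_ratio(program_benefits: float, program_costs: float) -> float:
    """BCR = program benefits / program costs (1.0 means break-even)."""
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    return program_benefits / program_costs


if __name__ == "__main__":
    # Hypothetical figures: $60,000 in monetized benefits from a $40,000 rollout.
    print(roi_percent(60_000, 40_000))         # prints 50.0
    print(benefit_cost_ratio(60_000, 40_000))  # prints 1.5
```

The arithmetic is the easy part. In practice, the harder work is isolating the program's effects and converting them to monetary values, which this sketch simply takes as given inputs.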

Performance Improvement

A performance problem in my school is the gap between teacher competency with, and “buy-in” of, new technology that could increase efficiency in and out of the classroom. One of the reasons for the gap is the demands placed on teachers beyond the instructional process. The demands to increase test scores, manage increased paperwork, plan lessons, and meet tutorial requirements all put a heightened level of stress on teachers. Managers in the corporate world have administrative assistants to help manage daily operational processes and scheduling requirements. Teachers have to manage and organize these daily processes themselves while staying current on their instructional practices.

Several years ago, Garland ISD created master trainer positions on each campus to help facilitate technology implementation and training for teachers. Over the last couple of years, the district has begun to hold master trainers accountable for facilitating technology tools that assist teachers during instruction and operational processes. Garland ISD is aware of the opportunities technology offers to improve performance in and out of the classroom. Before any technology is put in the hands of teachers or students, the district’s technology implementation plan provides adequate training so that teachers are competent in the new technology, understand its benefits, and know the proper ways to implement it in the classroom. Support and management systems are part of the roll-out process for new technologies. This roll-out process appears similar to Gilbert’s Behavior Engineering Model in that master trainers align district objectives with technology to improve teacher behaviors (morale) and overall student performance.

The implementation of Garland Education Online (GEO), a Moodle learning management system, is one type of performance support being implemented district-wide. GEO is not only for students; it also offers teachers support for training on non-critical software (e.g., MS Word and Excel), along with grade book tips and new features. Teachers can access GEO at school or at home at any time of day. This is where the master trainers’ role becomes apparent: they serve as an on-campus support system for teachers and are readily available to answer questions. For more critical tasks, the district’s performance support schedules training before rolling out instructional technology in order to build teachers’ confidence and ability to use these new instructional tools correctly.

Resources:

I am fascinated that you have already used a modified version of the Success Case Model for your Digital Video & Audio Design class. I would love to hear the results of your evaluation. I am also interested in the barriers and limitations that prevent students from being successful and engaged in the class. I believe the problem varies greatly from student to student. However, evaluative processes like these that measure student interest, barriers, and limitations would help us understand a little better how to structure our classes. When I looked at your blog the pictures were not there, so I copied and pasted it into a Word document and was able to see your graphics. Nice. I loved the comic about the “project” boat going off the cliff with the caption, “remember M & E information is useful only if it is used,” which is what Patton’s U-FE is all about. I like how you allow students to be evaluators and give feedback to each other in your classroom. We also have new technology coordinators, like Garland does. They are helpful and do help teachers buy in. The best way to get teachers to try new technology is to have them sit down and actually use the programs, and show how the programs can help in their classes. We are also using Moodle in Mesquite. I really enjoyed your blog. It was very informative and detailed. I like your work.

Brian, I am guessing you teach high school, and maybe students in this age group are more candid in their feedback to peers. I teach 4th grade, and this year more than ever, students wanted to be “nice” and not “hurt their friends’ feelings,” so it was really tough to make this particular strategy, peer feedback, successful. Previously, I have had groups that dared to be more honest in their opinions and in their use of rubrics with each other, and when that happens, so does learning. I recall a student who, no matter how I explained long division, could not understand it. I asked one of my more confident students, Jose, if he could help Sam understand division. They worked at it for a whole week, and on Friday, when it was time for volunteers to show us the process of long division, Sam volunteered. I looked over at his tutor, wanting some reassurance that Sam could do this; he couldn’t take many more blows to his confidence. Jose smiled and loudly said, “Mrs. Ro, no worries, Sam’s got this. Right, dude?” Guess what? Sam amazed us with his division skills, and I cannot claim any credit for it. I think honesty is a must for U-FE, and this year it was hard to come by. I’m going to explore ways to build up students’ confidence so they understand that their honest and fair evaluations actually help their peers rather than insult them.

Garland ISD is definitely being proactive about teacher buy-in when it comes to technology. It saddens me that at my campus our principal has spent all of her budget on technology (COW carts, SmartBoards, clickers, doc cameras, iPads, and flip cameras, to mention just a few of the advances we have), and I see them gathering dust. In another technology class I posted my thoughts on this and titled the post “Is it okay if I hang this from my projector?”, which is what I have seen projectors used for. The few of us who are experimenting with the technology and going to training after school and on weekends have tried to help others, but it is, as you mentioned in your post, one more drop in the overflowing cup of responsibilities teachers have. I think accountability is a huge factor in this, and Garland ISD demonstrates a sense of urgency when it “inspects what it expects” of master trainers. Principals who purchase all this equipment for the benefit of the digital natives in the classroom should also hold teachers accountable for the educational use of this technology. If not, the ROI will always be in the red, and even that figure would be skewed, because the teachers, rather than the students’ learning, would be dictating the results.

Ro, I do teach high school, and high school students are still candid in their feedback to peers. I don’t think most students have been asked to constructively critique their peers. Peer evaluations are always a challenge because students either don’t want to do them or are afraid they will hurt another student’s feelings. I always try to reiterate the constructive part of critiquing. Garland ISD does seem to be proactive in managing funds, since we are a “property poor” district. Our new superintendent, Dr. Morrison, is very pro-technology and wants to see technology in the hands of teachers and students. He has said that teachers will understand new technology before it is rolled out to students. So far I haven’t noticed a lot of wasteful spending on technology. I’m sure there has been some overspending and under-use, but ROI for technology does appear to be part of district administrators’ forethought. I guess time will tell. In closing, I hope to be a master trainer on my campus next year… we’ll see.

As you concluded your discussion of the SCM, you mentioned your desire to understand the barriers and limitations that prevent student success. Your statement shows you are already using a very important element of evaluation: introspection about outcomes. In some fields of study, you can isolate variables, carry out experiments, record reactions, and eventually perhaps discover formulas and patterns that simplify matters. In education it’s not so easy. There are many more variables in our typical classrooms, which makes it harder to figure out what could work better. Maybe there’s an app somewhere!